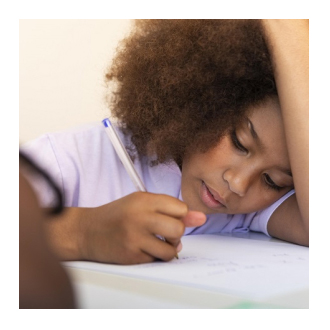

We are delighted to announce the latest article, published today by Stéphanie Ducrot in collaboration with Laurie Persia-Leibnitz, Marie Vernet, Brice Brossette, Chloé Prugnières and Jonathan Grainger in the International Journal of Educational Development.

This study reveals that “nearly half of the children in Martinique are at risk of reading delay, with errors three times more frequent than those of their peers in France.”

The CNRS chose to highlight these societal issues and sent a press alert (in French) to journalists in its network: https://www.cnrs.fr/fr/presse/apprentissage-de-la-lecture-des-inegalites-marquees-en-outre-mer

Article reference: Stéphanie Ducrot, Laurie Persia-Leibnitz, Marie Vernet, Brice Brossette, Chloé Prugnières, Jonathan Grainger. Children in French overseas departments are at a 3-fold increased risk of developing reading problems. International Journal of Educational Development, 2025, 115, pp.103277. ⟨hal-05043884v1⟩

Full-text article: https://www.sciencedirect.com/science/article/pii/S0738059325000756

Credits: L. Persia-Leibnitz