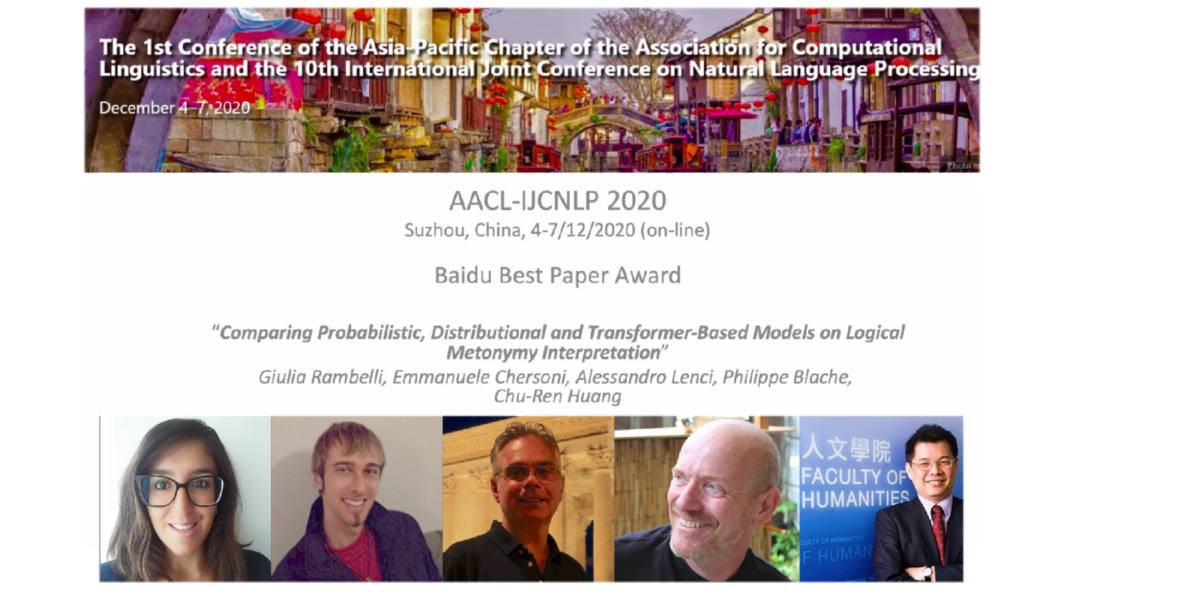

Giulia Rambelli, PhD student at LPL under the supervision of Philippe Blache and Alessandro Lenci (Pisa), has just received the Best Paper Award for the paper “Comparing Probabilistic, Distributional and Transformer-Based Models on Logical Metonymy Interpretation”, of which she is first author, co-authored with P. Blache, E. Chersoni, A. Lenci and C.-R. Huang.

The award was presented last Friday at the AACL-IJCNLP conference held online December 4-7 (Suzhou, China). Congratulations, Giulia!

Abstract:

In linguistics and cognitive science, Logical metonymies are defined as type clashes between an event-selecting verb and an entity-denoting noun (e.g. The editor finished the article), which are typically interpreted by inferring a hidden event (e.g. reading) on the basis of contextual cues. This paper tackles the problem of logical metonymy interpretation, that is, the retrieval of the covert event via computational methods. We compare different types of models, including the probabilistic and the distributional ones previously introduced in the literature on the topic. For the first time, we also tested on this task some of the recent Transformer-based models, such as BERT, RoBERTa, XLNet, and GPT-2. Our results show a complex scenario, in which the best Transformer-based models and some traditional distributional models perform very similarly. However, the low performance on some of the testing datasets suggests that logical metonymy is still a challenging phenomenon for computational modeling.

_____________

Update February 2nd, 2021

Giulia Rambelli's distinction highlighted in the AMU Newsletter

In the latest issue of its newsletter, Aix-Marseille University (AMU) devoted a news brief to Giulia Rambelli, PhD student at LPL, and to the distinction she received at the AACL-IJCNLP conference last December.

Link to the brief: http://url.univ-amu.fr/lettreamu_janvier21_n85 (p. 23)

See the article on www.lpl-aix.fr: Best Paper Award: Giulia Rambelli, PhD student at the LPL