We are pleased to announce the first study published by Giulia Danielou, a doctoral student at the LPL under the supervision of Clément François, carried out in collaboration with the CRPN and the APHM. It has just been published in the European Journal of Neuroscience as part of the ANR JCJC BABYLANG project led by Clément François:

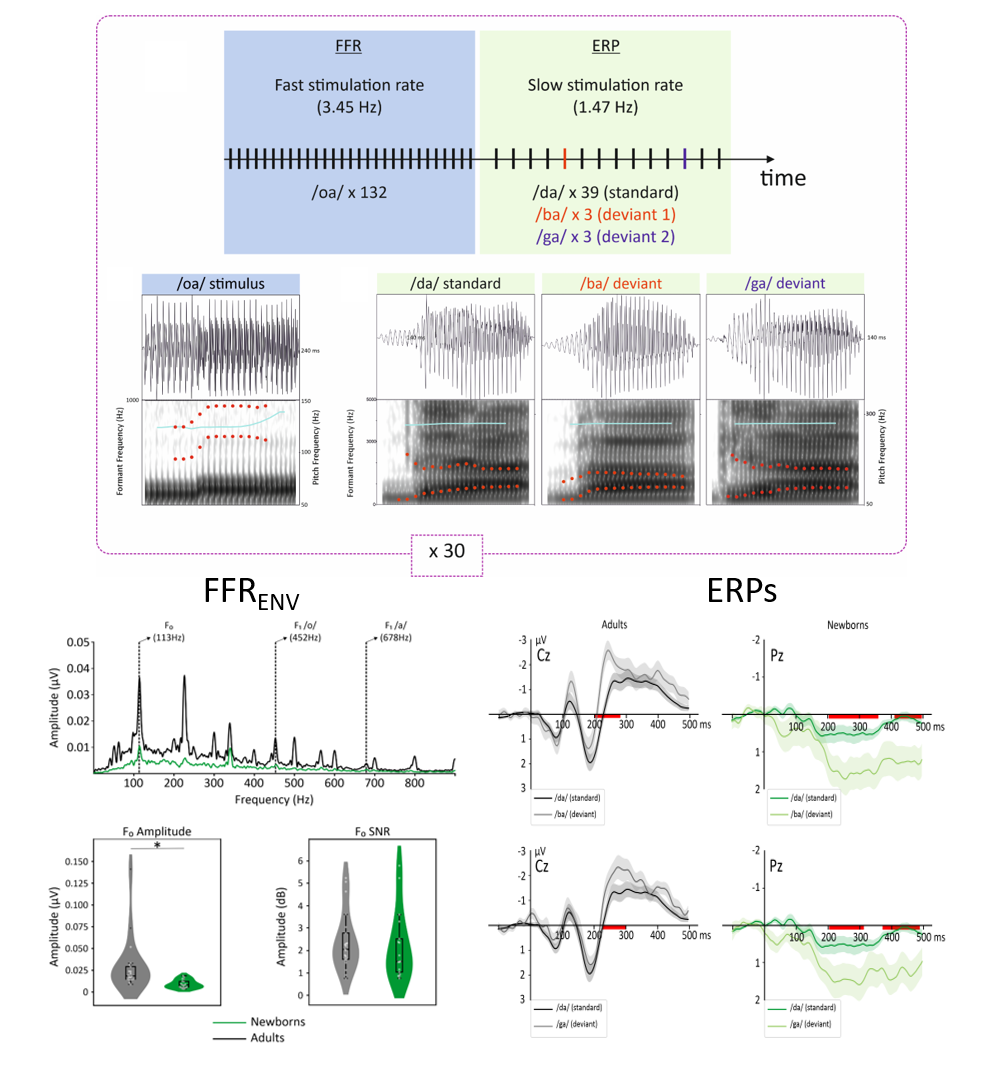

Reference: Danielou, G., Hervé, E., Dubarry, A.S., Desnous, B., François, C. Event-related brain potentials and Frequency Following Response to syllables in newborns and adults. European Journal of Neuroscience.

Full text article: https://doi.org/10.1111/ejn.70418

Summary:

From birth, newborns are able to distinguish speech sounds, an essential skill for learning to speak. But how does their still-immature brain process these sounds compared with the adult brain? This study explores the brain mechanisms that enable newborns and adults to perceive syllables such as /ba/, /da/ and /ga/. To do this, the authors recorded event-related potentials (ERPs) and frequency-following responses (FFRs) in 17 three-day-old newborns and 21 young adults. The FFR reflects the encoding of acoustic characteristics of sounds, such as fundamental frequency or formants, while ERPs measure the brain's response to category changes in a sequence of repeated syllables. The results confirm that babies are born with brains that are already sensitive to speech sounds, but that their processing is less accurate than in adults. The study thus supports the key role that experience and brain maturation play in language acquisition, and opens avenues for better understanding the influence of the environment on brain development.

Credits: Authors