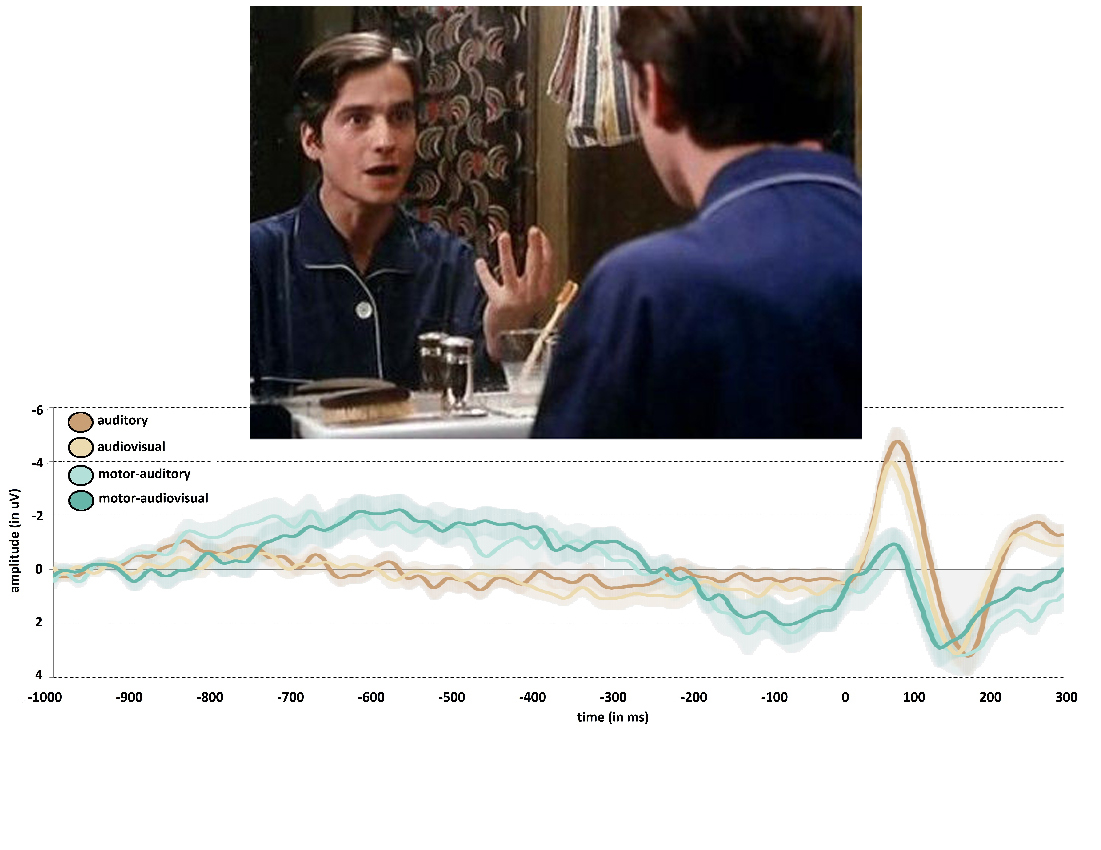

Marc Sato, CNRS researcher at LPL, has just published an article in the journal Cortex on the distinct influences of motor and visual predictive processes on auditory cortical processing during speech production and perception.

Reference: Marc Sato. Motor and visual influences on auditory neural processing during speaking and listening. Cortex, 2022, 152, 21-35 (https://doi.org/10.1016/j.cortex.2022.03.013)

The full text of the article is available via this direct link or through the AMU search interface.

Photo credits: Antoine Doinel